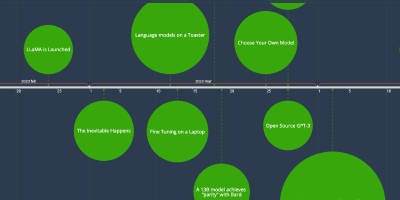

28 Mar 2023 - Open Source GPT-3

Description:

Cerebras (not to be confused with our own Cerebra) trains the GPT-3 architecture using the compute-optimal training schedule implied by Chinchilla and the optimal scaling implied by μ-parameterization. The resulting models outperform existing GPT-3 clones by a wide margin and represent the first confirmed use of μ-parameterization “in the wild”. The models are trained from scratch, meaning the community is no longer dependent on LLaMA.
